- UAV testing is becoming increasingly important. However, drone testing often involves either expensive real-world demonstrations or limited, generic 3D simulations.

- The main advantage of this platform is its ability to replicate real-world environments in a virtual setting, making drone testing more realistic, accessible, and scalable.

- Two deep learning algorithms, YOLO v4 and Mask R-CNN, are used to create the 3D simulation environment.

Current Need for Realistic Simulation Environments

With the rapid development of drone technology and applications in recent years, demand for Unmanned Aerial Vehicles (UAVs) has surged. As a result, drone manufacturers increasingly rely on simulated environments for product testing, because they are cheaper and safer than real-world testing. However, generic, manually created simulated environments often fail to present the challenges encountered in real-world scenarios.

So, how can we address this issue to ensure simulated settings more accurately reflect true-to-life conditions? This was the challenge tackled by researchers Justin Nakama, Ricky Parada, João P. Matos-Carvalho, Fábio Azevedo, Dário Pedro, and Luís Campos. Their efforts led to the development of a new approach driven by machine learning algorithms.

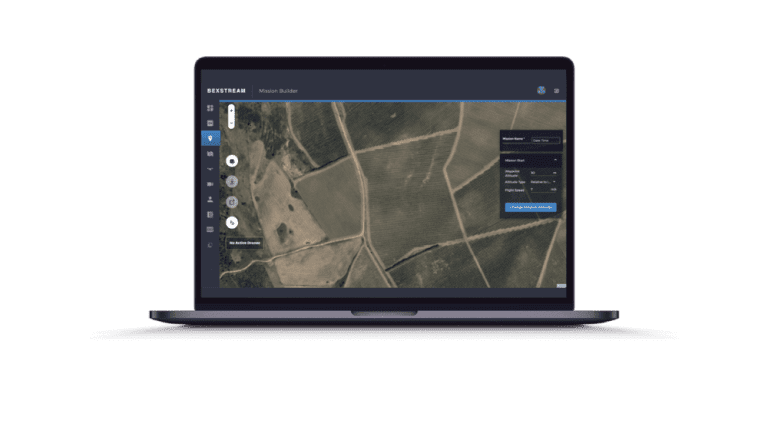

In their paper, Autonomous Environment Generator for UAV-Based Simulation, they propose a novel testbed where machine learning is used to procedurally generate, scale, and place 3D models, resulting in increasingly realistic simulations. Satellite images serve as the foundation for these environments, enabling users to test UAVs in a more detailed representation of real-world scenarios.

Although some improvements are still needed, their work demonstrates a promising proof of concept for an environment generator that can save time and resources in the planning stages by providing realistic virtual settings for testing.

Keep reading to discover more about the technology behind this disruptive testbed and its practical applications.

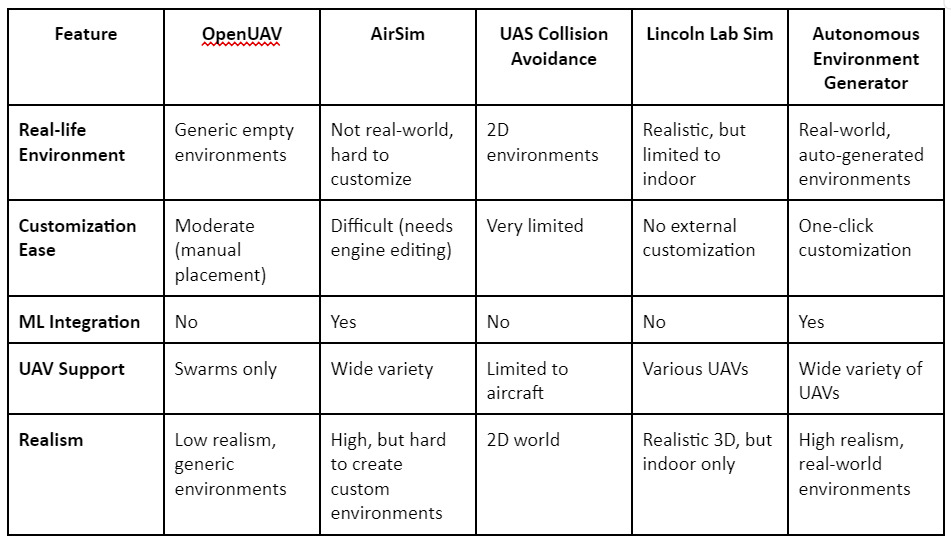

Comparison: How Existing Simulators Stack Up

Several UAV testbed platforms already exist, such as OpenUAV, AirSim, and the Lincoln Lab UAV Simulator. While these platforms offer features like UAV swarm testing or high-quality rendering, they all fall short when it comes to the automatic generation of realistic environments. Many require manual placement of 3D models and do not offer the flexibility of simulating actual geographic locations. This paper’s proposed platform fills these gaps by automating the entire process using satellite images, thereby generating accurate environments on demand.

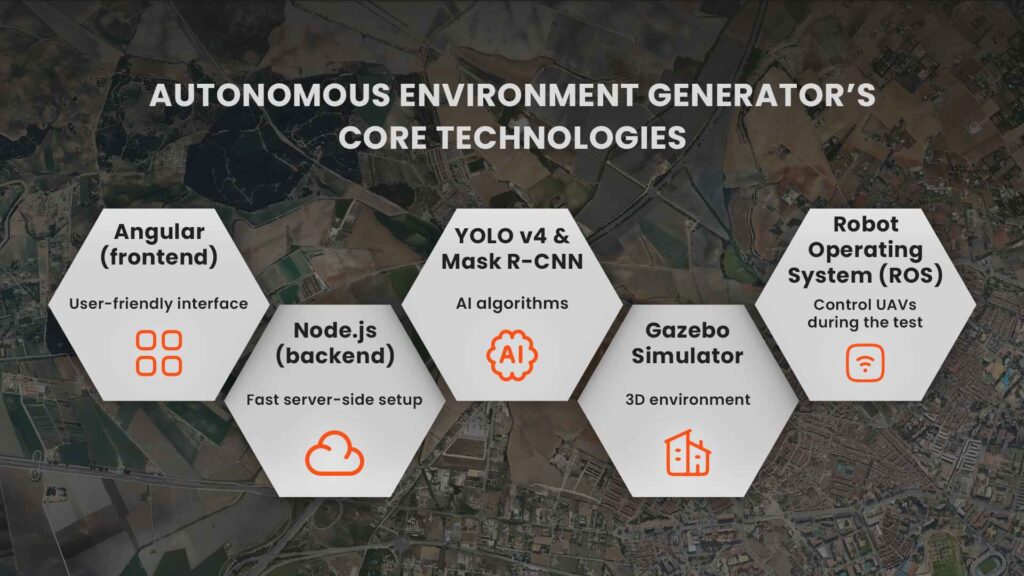

System Architecture and the Use of AI Algorithms

At the core of the Autonomous Environment Generator is an architecture that can represent any real-world geographic area. It starts with satellite images, which are processed using AI algorithms to detect and replicate objects like buildings, trees, and roads. These detected objects are then placed into the simulation using the Gazebo 3D robotics simulator, so that the environment closely mirrors the real world.

Key Technologies Behind the Autonomous Environment Generator

The system architecture is composed of:

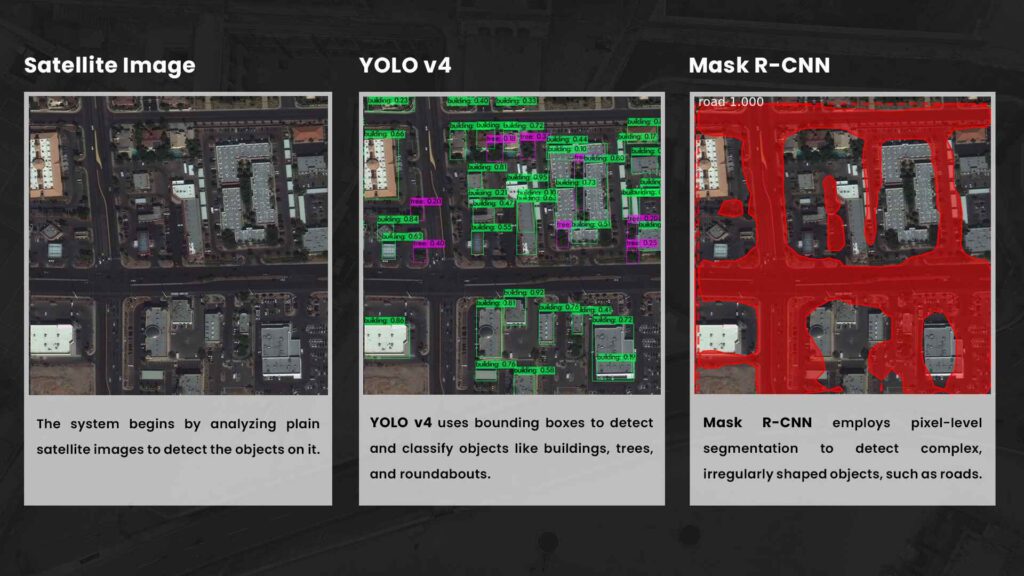

Machine learning is the driving force behind this platform’s ability to create dynamic, real-world environments. Two artificial intelligence algorithms, YOLO v4 and Mask R-CNN, are used to create the 3D simulation environment.

YOLO v4 is a state-of-the-art object detection model that uses convolutional neural networks to identify and locate objects within images. It works by dividing images into a grid and predicting object locations using bounding boxes for each region, allowing for fast and accurate detection. Specifically, YOLO v4 was trained on the DOTA dataset, which consists of aerial and satellite images, enabling it to specialize in detecting objects such as buildings, trees, and roundabouts from a bird’s-eye view.
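To make the grid and bounding-box idea concrete, here is a minimal sketch of how a single detection can be decoded. It assumes the normalized `(cx, cy, w, h)` box format used by Darknet-style annotations and a single illustrative 13 x 13 grid; YOLO v4 itself predicts at several scales with anchor boxes, and the function names below are hypothetical, not taken from the paper.

```python
def yolo_to_pixel_box(cx, cy, w, h, img_w, img_h):
    """Convert a normalized (centre x, centre y, width, height) box
    into pixel corners (x_min, y_min, x_max, y_max)."""
    box_w, box_h = w * img_w, h * img_h
    x_min = cx * img_w - box_w / 2
    y_min = cy * img_h - box_h / 2
    return (x_min, y_min, x_min + box_w, y_min + box_h)


def responsible_cell(cx, cy, grid_size):
    """Return the (column, row) of the grid cell containing the box centre.

    The min() clamp keeps a centre lying exactly on the right or bottom
    edge (cx == 1.0 or cy == 1.0) inside the grid.
    """
    col = min(int(cx * grid_size), grid_size - 1)
    row = min(int(cy * grid_size), grid_size - 1)
    return (col, row)


if __name__ == "__main__":
    # A box centred in a 1000 x 500 pixel satellite tile (illustrative values).
    print(yolo_to_pixel_box(0.5, 0.5, 0.25, 0.5, 1000, 500))
    print(responsible_cell(0.5, 0.5, 13))
```

The pixel corners are what a later placement step would need in order to estimate where an object sits in the tile and how large it is.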

Mask R-CNN, on the other hand, is used for road detection, which requires pixel-level segmentation. It generates masks that capture complex shapes more precisely than a bounding box can. This model was trained using the SpaceNet Road Network Detection Challenge dataset.

While YOLO v4 is excellent for detecting certain objects, its road detection is less accurate, which is why Mask R-CNN is incorporated into the architecture for more detailed segmentation.
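A small toy example illustrates why roads suit masks better than boxes. The sketch below builds a one-pixel-wide diagonal "road" on an 8 x 8 grid and measures how much of its bounding box the road actually covers. The grid, the road shape, and the function names are all illustrative assumptions; no real Mask R-CNN output is involved.

```python
def diagonal_road(size):
    """Build a size x size binary mask with a one-pixel-wide diagonal road."""
    return [[1 if r == c else 0 for c in range(size)] for r in range(size)]


def mask_bounding_box(mask):
    """Return (row_min, col_min, row_max, col_max) of the nonzero pixels, inclusive."""
    rows = [r for r, line in enumerate(mask) if any(line)]
    cols = [c for line in mask for c, v in enumerate(line) if v]
    if not rows:
        raise ValueError("mask is empty")
    return (min(rows), min(cols), max(rows), max(cols))


def fill_ratio(mask):
    """Fraction of the bounding box that is actually covered by the mask."""
    r0, c0, r1, c1 = mask_bounding_box(mask)
    box_area = (r1 - r0 + 1) * (c1 - c0 + 1)
    road_pixels = sum(1 for line in mask for v in line if v)
    return road_pixels / box_area


if __name__ == "__main__":
    road = diagonal_road(8)
    print(mask_bounding_box(road))
    print(fill_ratio(road))
```

Here the road covers only 8 of the 64 pixels in its bounding box, so a box alone would say very little about where the road actually runs.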

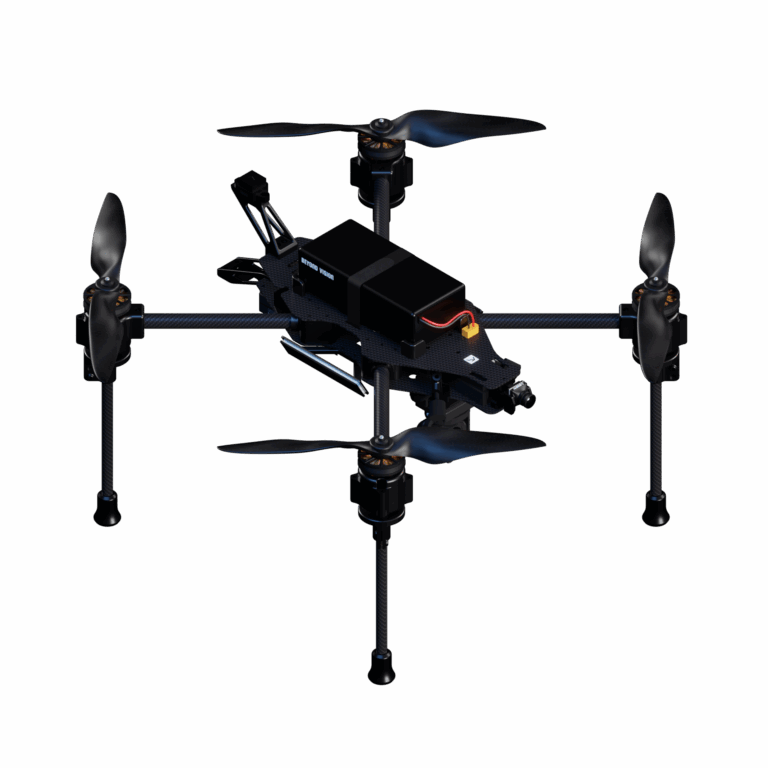

Heifu: the UAV Used in Simulations

The generator was trained on aerial image datasets and achieves a balance between speed and accuracy. Once the satellite images are processed and objects are detected, the data is sent to the backend, where it is stored for future use. The 3D models of buildings, trees, and other objects are then scaled and placed in the Gazebo simulator at their correct geographic positions. The simulation is controlled using the Robot Operating System (ROS), which enables users to manually or autonomously control UAVs during the test. UAVs interact with these models, allowing testers to simulate real-world flight scenarios, such as navigating through an urban environment.
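The placement step can be sketched roughly as follows. This is not the authors' implementation: it assumes the satellite tile's geographic bounds are known, interpolates linearly inside the tile, and uses a flat-earth (equirectangular) approximation that is only reasonable for small areas. The function names, the `model://tree` URI, and the coordinates are hypothetical, and model scaling is left out for brevity.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS84 equatorial radius, in metres


def pixel_to_latlon(px, py, img_w, img_h, north, south, west, east):
    """Linearly interpolate a pixel position to (lat, lon) inside a tile.

    Assumes the tile is small enough that linear interpolation is acceptable.
    Pixel y grows downwards, so latitude decreases as py increases.
    """
    lon = west + (px / img_w) * (east - west)
    lat = north - (py / img_h) * (north - south)
    return lat, lon


def latlon_to_local_xy(lat, lon, origin_lat, origin_lon):
    """Convert (lat, lon) to metres east (x) and north (y) of a local origin."""
    x = (math.radians(lon - origin_lon) * EARTH_RADIUS_M
         * math.cos(math.radians(origin_lat)))
    y = math.radians(lat - origin_lat) * EARTH_RADIUS_M
    return x, y


def sdf_include(model_uri, name, x, y, yaw=0.0):
    """Build an SDF <include> element that places a model at (x, y) on the ground."""
    return (
        "<include>"
        f"<uri>{model_uri}</uri>"
        f"<name>{name}</name>"
        f"<pose>{x:.2f} {y:.2f} 0 0 0 {yaw:.2f}</pose>"
        "</include>"
    )


if __name__ == "__main__":
    # Hypothetical tile bounds and a detection at the tile centre.
    lat, lon = pixel_to_latlon(500, 250, 1000, 500,
                               north=38.75, south=38.5, west=-9.25, east=-9.0)
    x, y = latlon_to_local_xy(lat, lon, origin_lat=38.5, origin_lon=-9.25)
    print(sdf_include("model://tree", "tree_0", x, y))
```

Using the tile's south-west corner as the local origin keeps all coordinates positive, which makes the generated world easier to inspect by hand.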

Both models were tested on a variety of satellite images and performed well overall. However, some challenges remain, particularly with the placement of complex building geometries.

Although the current system lacks some advanced graphical details, the strength of the platform lies in its scalability and realism. It eliminates the need for physical testing environments, making UAV testing cheaper, faster, and more accessible.

What’s Next? Improvements to the Autonomous Environment Generator

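While the Autonomous Environment Generator is already a powerful tool, the authors outline some areas for future improvement, including more detailed object models, faster load times, and the ability to save and reload simulations.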

These enhancements will make the platform even more versatile and capable of handling more complex UAV testing scenarios in the future.

The Autonomous Environment Generator points to a promising future for UAV testing and could change the way drone manufacturers test and verify the performance of their products.

Autonomous Environment Generator

A proposed system that automatically creates 3D environments for UAV testing using artificial intelligence (AI) and satellite imagery to replicate real-world scenarios.

YOLO v4 (You Only Look Once, Version 4)

A state-of-the-art object detection algorithm using convolutional neural networks (CNNs) to quickly identify and localize objects in an image, such as buildings and trees.

DOTA Dataset

A dataset of aerial imagery used to train YOLO v4 in identifying objects in satellite images, enhancing its performance in UAV testing environments.

Mask R-CNN

An advanced deep learning model that performs pixel-level segmentation, identifying and outlining specific areas within an image, such as roads and complex shapes.

SpaceNet Road Network Detection Challenge Dataset

A dataset used to train the Mask R-CNN model for detecting roads in satellite images, helping create more detailed simulation environments.

Convolutional Neural Networks (CNNs)

A class of deep learning algorithms used for analyzing visual imagery. YOLO v4 and Mask R-CNN both rely on CNNs for object detection and segmentation.

Gazebo 3D Simulator

A robotics simulation tool that renders physical environments in 3D, allowing for the testing and development of UAVs in realistic settings.

ROS (Robot Operating System)

An open-source platform that provides the framework for controlling robots, including UAVs, enabling them to operate autonomously or be controlled manually.

3-Minute Summary

Don’t have time to read the full article now? Get the key points in this three-minute summary.

As drones and their applications gain dominance in industries like security, transportation, and logistics, UAV testing is becoming increasingly important. However, testing these drones often involves either expensive real-world demonstrations or limited, generic 3D simulations that don’t accurately replicate real-world environments. The paper, Autonomous Environment Generator for UAV-Based Simulation, presents a groundbreaking solution: an autonomous system that generates realistic testing environments for UAVs based on real-world satellite images.

By using machine learning algorithms like YOLO v4 and Mask R-CNN, the system can detect objects such as buildings, trees, and roads in satellite images, then place 3D models of these objects into a simulation. The Gazebo simulator is used to render these environments, which can then be navigated by drones controlled via the Robot Operating System (ROS).

The main advantage of this platform is its ability to replicate real-world environments in a virtual setting, making UAV testing more realistic, accessible, and scalable. Current platforms either lack real-world accuracy or require manual placement of 3D objects, but this system automates the process, significantly reducing the time and cost involved in testing.

Despite its success, there are areas for improvement, such as the need for more detailed object models and faster load times. In the future, the platform aims to include the ability to save and reload simulations, making it even more flexible for repeated testing.

Your Questions Answered

A rapid Q&A diving into the cutting-edge technologies behind UAV testing, from autonomous environment generation to machine learning and satellite-based simulations.